Here U and V are

matrices that obey the orthogonality relations and W is an

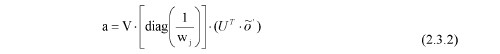

diagonal matrix, which contains rank k real positive singular values (wk) arranged in decreasing magnitude. Because the covariance matrix C is a square symmetric matrix, CT =VWUT = UWVT = C. This proves that the left and right singular vector U and V are equal. Therefore, the method used can also be called Principal Component Analysis (PCA). The decomposition can be used to obtain the regression coefficients:
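As a quick numerical check of the argument that U = V for a covariance matrix, the sketch below builds a sample covariance matrix from synthetic data, takes its SVD, and compares the result with a symmetric eigensolver. It assumes Python with NumPy; the helper name `svd_of_covariance` and the data are hypothetical and not part of the original method.

```python
import numpy as np

def svd_of_covariance(X):
    """Hypothetical helper: covariance of X (samples in rows) and its SVD C = U W V^T."""
    C = np.cov(X, rowvar=False)      # square, symmetric, positive semidefinite
    U, w, Vt = np.linalg.svd(C)      # w is returned in decreasing order
    return C, U, w, Vt.T

rng = np.random.default_rng(0)
# Synthetic predictors: 500 samples of 4 variables with clearly different spreads
X = rng.standard_normal((500, 4)) * np.array([3.0, 2.0, 1.0, 0.5])
C, U, w, V = svd_of_covariance(X)

# Left and right singular vectors coincide for a positive definite C
print("U equals V:", np.allclose(U, V))
# Singular values equal the eigenvalues (the PCA variances); eigvalsh is ascending
print("w equals eigenvalues:", np.allclose(w, np.linalg.eigvalsh(C)[::-1]))
# The factors reproduce C
print("C = U W V^T:", np.allclose(C, U @ np.diag(w) @ V.T))
```

Because `np.linalg.eigvalsh` returns eigenvalues in ascending order, they are reversed before being compared with the decreasing singular values.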

Pointwise regression using the SVD method removes the singular-matrix problem, which cannot be fully resolved with Gauss-Jordan elimination. Moreover, solving the above equation with the small singular values zeroed (i.e., with $1/w_j$ set to zero for small $w_j$) gives better regression coefficients than the SVD solution in which the small $w_j$ are left nonzero. Retaining the small $w_j$ as nonzero usually makes the residual larger (Press et al., 1992). In other words, if most of the singular values $w_j$ of a matrix C are small, C is better approximated by only the few large $w_j$.
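The truncated solution can be sketched as follows. Because the regression equation itself is not reproduced here, the sketch assumes that the coefficients b solve a linear system C b = d, where d holds the covariances between the predictors and the predictand; following the SVD recipe of Press et al. (1992), b = V diag(1/w_j) U^T d with 1/w_j replaced by zero for small w_j. The helper name, the relative threshold `rcond`, and the synthetic data are hypothetical. C is made exactly singular by including a predictor that is the sum of two others, so C has no inverse.

```python
import numpy as np

def svd_regression_coefficients(C, d, rcond=1e-10):
    """Hypothetical helper: solve C b = d with an edited SVD.

    b = V diag(1/w_j) U^T d, where 1/w_j is replaced by 0 whenever
    w_j < rcond * max(w), i.e. the small singular values are zeroed.
    """
    U, w, Vt = np.linalg.svd(C)          # w is returned in decreasing order
    inv_w = np.zeros_like(w)
    keep = w > rcond * w[0]
    inv_w[keep] = 1.0 / w[keep]          # small w_j contribute nothing
    return Vt.T @ (inv_w * (U.T @ d))

# Synthetic data: the third predictor is the sum of the first two, so C is singular
rng = np.random.default_rng(1)
x = rng.standard_normal((200, 2))
X = np.column_stack([x, x[:, 0] + x[:, 1]])
y = x[:, 0] - 2.0 * x[:, 1] + 0.1 * rng.standard_normal(200)

S = np.cov(np.column_stack([X, y]), rowvar=False)  # joint 4 x 4 covariance matrix
C = S[:3, :3]                                      # predictor covariance (rank 2)
d = S[:3, 3]                                       # predictor-predictand covariances

b = svd_regression_coefficients(C, d)
print("coefficients:", b)
print("C b = d satisfied:", np.allclose(C @ b, d))
```

Discarding the near-zero singular value removes the dependent direction (1, 1, -1) from the solution, so the result is the minimum-norm set of coefficients that still satisfies C b = d.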
